Unleashing Productivity with Microsoft Copilot in Teams: A Comprehensive Guide

By Craig Pilkenton

VP, Technical Delivery

Overview

Over the last few quarters, Microsoft has released a series of AI (Artificial Intelligence) companions within the Microsoft 365 ecosystem that help users complete a wide range of tasks faster and more efficiently. We spend too much time on many daily and weekly tasks in the Office 365 suite, and that time costs us creativity and the ability to innovate.

A recent Forrester report, Build Your Business Case For Microsoft 365 Copilot, predicted that 6.9 million US knowledge workers, roughly 8% of that group, will be using Microsoft 365 Copilot by the end of 2024. Forrester also expects about a third of US Microsoft 365 customers to invest in Copilot in the first year, providing licenses to around 40% of their employees during that period. AI toolsets such as LLMs (Large Language Models), code generators, and chatbots let users minimize repetitive work and focus on new, creative endeavors.

What is a Copilot?

Microsoft Copilot Studio, formerly known as Power Virtual Agents, combines the Power Platform's low-code toolsets with Azure OpenAI capabilities, bringing the power of LLMs together with data that lives within Microsoft 365. Copilot is also integrated into common applications such as Teams, Excel, and Word to unlock higher levels of productivity and help users complete tasks faster.

Copilot Studio can help us create AI-powered chatbots that handle requests ranging from simple answers to common questions, to issues that require complex or branching conversations, to interacting with other services on our behalf. We can engage with our employees across Microsoft Teams, websites, mobile apps, email, or any channel supported by the Azure Bot Framework.

How it can be used

Copilots can be created within any organization, enabling developers to deploy interactive solutions with a faster time to delivery.

Several enterprise scenarios are well suited to Copilots that enable faster innovation for end users:

Employee handbook and benefits searches

Requesting vacation time off

Submitting help desk tickets

Searches for previous work products

Reaching out to 3rd Party APIs for information

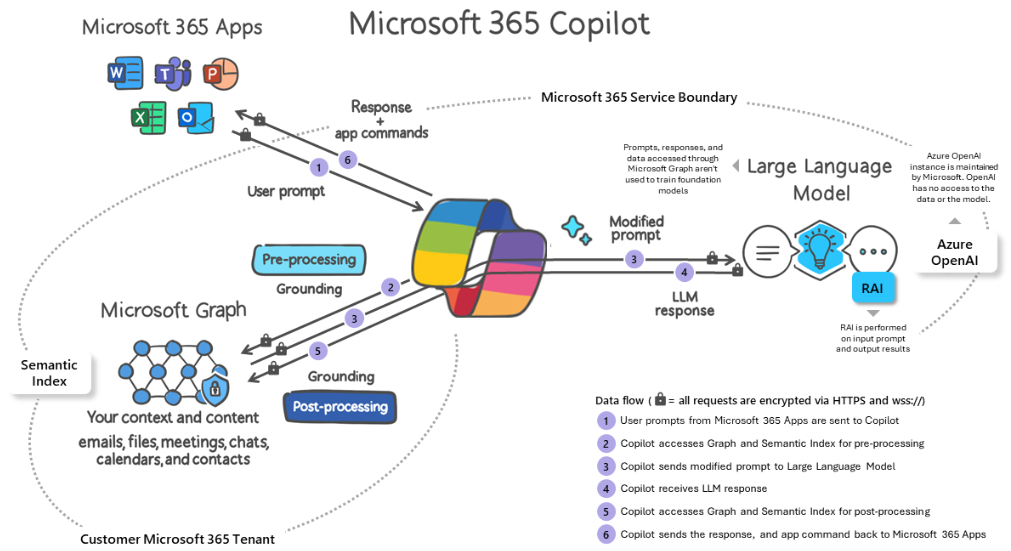

Learn.Microsoft.com - Microsoft 365 Copilot Overview

As the diagram shows, Microsoft 365 Chat lets Copilots draw on cross-application intelligence, simplifying workflows across multiple toolsets. Copilots use foundational LLMs, the organizational data we supply, and specifically indexed websites to build a cognitive 'brain' and 'memory' against which questions can be posed. The user's text prompts are applied against this infrastructure, which then generates candidate responses. We map these responses to our own branching logic to direct the conversation with the user, and from there build interaction options that automate common tasks.

Understanding and mitigating the risks

As with any new technology rolled out to end users, it is important to understand both its capabilities and its risks. With an AI toolset such as Copilot, two primary business risks could derail a successful implementation.

The first risk to mitigate is that the generative AI may hallucinate, that is, make guesses that go beyond the implementation data, and give users inaccurate information. That information can become canon within the organization and send the business down the wrong path, which is especially worrisome when analyzing financial data that drives business plans. The best mitigations are to set the LLM's confidence rating to its highest level and to have solid test plans in place as the AI is implemented.

These tests validate the responses returned and confirm that they match not only each individual data source but also the combined result of all the data sources, because the generative AI interprets an answer by drawing on everything available to the LLM.
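To illustrate the idea of returning only high-confidence answers, here is a minimal Python sketch. In Copilot Studio this is a configuration setting rather than code, so the `Answer` class, the numeric confidence score, the 0.8 threshold, and the fallback text are all hypothetical assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List


# Hypothetical answer shape: Copilot Studio does not expose a class like this.
@dataclass
class Answer:
    text: str
    confidence: float  # assumed score between 0.0 and 1.0
    citations: List[str] = field(default_factory=list)


FALLBACK = "I couldn't find a confident answer. Please rephrase or contact the help desk."


def gate_answer(answer: Answer, threshold: float = 0.8) -> str:
    """Show the answer only if it clears the threshold and cites at least one source."""
    if answer.confidence >= threshold and answer.citations:
        return answer.text
    return FALLBACK
```

Requiring a citation as well as a high score reflects the point above that answers should be traceable to the data sources; test cases can then check that weakly supported prompts produce the fallback instead of a guess.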

The second risk to watch out for is the language models' access to corporate data that's not secured properly. Allowing the LLM to ingest improperly locked-down data sources can expose the organization to data leakage.

The best way to address this is to be very selective about which data sources the Copilot can access, and to anonymize them as needed, so that everything it can return is appropriate for every chatbot user to see. Together, these steps keep the chatbot from oversharing sensitive data.
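One way to anonymize content before it becomes a data source is to redact obvious identifiers. The minimal Python sketch below assumes simple regular-expression patterns for email addresses, US Social Security numbers, and US phone numbers; these patterns are illustrative assumptions, not a complete anonymization policy.

```python
import re

# Illustrative patterns only; a real anonymization policy needs review by your security team.
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each match with a [LABEL] placeholder before the text is ingested."""
    for label, pattern in REDACTION_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Contact jane.doe@example.com or 555-123-4567."))
# prints: Contact [EMAIL] or [PHONE].
```

Redaction complements, rather than replaces, limiting which sources the Copilot can reach in the first place.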

Securing the solution is an ongoing concern that must be understood and implemented. When you build a Copilot, its security defaults to Teams authentication, tied to the Teams Channel, so the bot is secured to and deployable within your Microsoft 365 tenant. Nearly a dozen other Channels are available, and careful security planning is paramount for any of them an organization intends to publish to.

Planning implementation

With an understanding of what Copilots can do for us, we move on to the next stage. As with any enterprise application development, success lies in good planning at the start of an AI endeavor. Part of that planning is deciding how the chatbot will behave and interact with your end users.

From a high-level perspective, there are three major types of bot behaviors that we can implement:

'Searcher': the bot searches on the user's behalf using keywords they select or type, drawing on pre-set data analyzed by generative AI

'Router': the bot walks the user through pre-set question flows, then routes them to a final answer or to a help desk team member

'Tasker': the bot lets the user select specified actions and then completes tasks for them, such as submitting help desk tickets, vacation requests, or any other automation task

We can mix and match these behaviors as needed, but in this case the Copilot will be a Searcher, so we first document the data sources we want users to search. Next, we set up the Topics and keywords for each data source so users are routed to the appropriate Topic. Then we map out any internal branching logic and how search results will be displayed to the user, and finally we build out how users can intuitively restart or exit a specific conversation.

Determine data sources

The first step is choosing the content we want end users to be able to search, then building up or uploading that source content for the Azure OpenAI LLM to use. Part of this step is making sure our organization's data is ready for AI. As discussed in a previous Hylaine article, Data Transformation: Paving the way for AI Success in Your Organization, we need to apply a data maturity model to ensure the efficacy of our AI workloads. This helps ensure that the results returned are correct and appropriate for our end users' needs.

In this case, we are using data sources our organization has curated for some time, which makes them well suited to generative AI and likely to return accurate results.

Employee Handbook covering all the information on working at Hylaine

Hylaine.com containing all of our published Insights content

Our Technology Portfolio of recent projects

Just for fun, integration with Icanhazdadjoke.com, which has a rich API for pulling Dad Jokes on-demand

Together, these sources hold a large amount of targeted, high-maturity content about our organization and how we deliver work, making them a strong foundation for employee self-service. Note the setting below the ingested data sources that instructs the generative AI to return only high-confidence responses, minimizing the hallucinations noted above.

Create a test plan

Our second, and arguably most important, step is to create a test plan against the data sources that is extensive enough to validate the results returned and minimize the risk of incorrect results as much as possible.

Example Test Plan

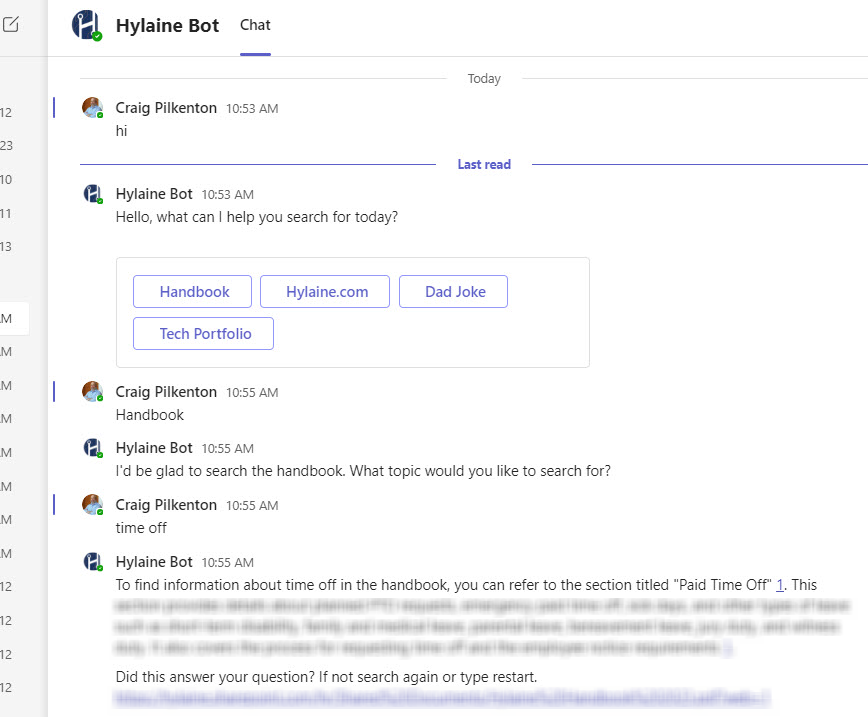

Greeting

Shows the 4 Quick Click options

Routes successfully to correct topic chosen

Start Over

Shows the 2 Quick Click options and routes successfully to correct choice

Yes - Greeting comes back up

No - continues being able to search in their topic

Exit

Brings up a message saying the user can simply close the window

Handbook

Vacation - returns results on "Paid Time Off"

Medical - returns results on benefits section

Hylaine.com

xxx - Details and Insights article on zzz

xxx - The zzz page comes up

xxx - Returns project reference along with zzz article

Hylaine projects

xxx - returns client zzz

xxx - 2 citations

xxx - 2 examples

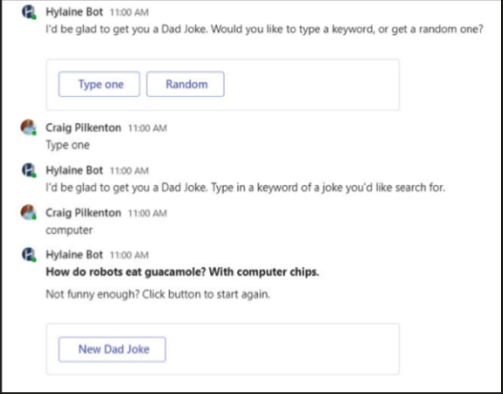

Dad Jokes

Shows the 2 Quick Click options and routes successfully to correct choice

Keyword search - returns a joke

Random - returns a joke

Creating a good test plan before development begins helps ensure that the chatbot returns correct results as planned. It also reinforces our understanding of how the generative AI will draw on our data sources, so that the responses users receive match expectations.
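To rerun the test plan consistently, it can be captured as data. The Python sketch below encodes the two Handbook rows from the plan and checks that each response contains an expected phrase. `ask_bot` is a hypothetical callable you would connect to however you reach the chatbot; here a stub with canned replies stands in for it, and the case-insensitive substring check is a deliberately simple assumption about what "returns results on" means.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class PlanCase:
    topic: str
    user_input: str
    expected_phrase: str  # text the response must contain (case-insensitive)


def run_test_plan(ask_bot: Callable[[str], str], cases: List[PlanCase]) -> List[str]:
    """Send each input to the bot and describe every case that fails."""
    failures = []
    for case in cases:
        response = ask_bot(case.user_input)
        if case.expected_phrase.lower() not in response.lower():
            failures.append(f"{case.topic}: {case.user_input!r} -> {response!r}")
    return failures


# The two Handbook rows from the plan.
PLAN = [
    PlanCase("Handbook", "Vacation", "Paid Time Off"),
    PlanCase("Handbook", "Medical", "benefits"),
]


def stub_bot(prompt: str) -> str:
    """Hypothetical stand-in for a real bot client."""
    canned = {
        "Vacation": "Here is what the handbook says about Paid Time Off.",
        "Medical": "See the benefits section of the handbook.",
    }
    return canned.get(prompt, "Sorry, I couldn't find that.")


print(run_test_plan(stub_bot, PLAN))  # prints: []
```

An empty list means every case passed. Rerunning the plan after each change to Topics or data sources matters because generative answers can shift when the underlying sources change.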

Topics and keywords

The next step is to create Topics that map to the chosen data sources. Topics are discrete conversation paths that, used together, let our users have a conversation with the chatbot that feels natural and flows appropriately. The mapping from data sources to Topics need not be one-to-one, but it is in this case.

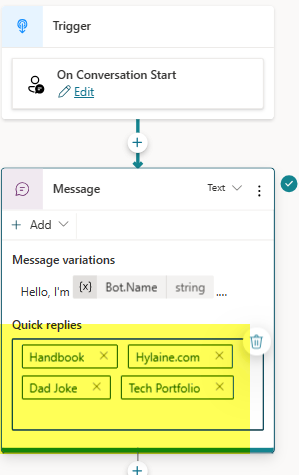

To maximize time savings, we add several Quick Replies that match our Topics and trigger phrases to the Conversation Start System node, so users only have to click to begin rather than type. This gives us control over the conversation and keeps it flowing efficiently.

For each Topic, we identify the keywords and phrases a user is likely to select or type to trigger that conversation path. A Topic can have up to 200 trigger phrases, but we should be able to cover what our audience will actually use with far fewer.
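The sketch below illustrates two ideas from this step: keeping each Topic under the 200-trigger limit and routing an utterance to a Topic. Copilot Studio matches trigger phrases with its own language understanding, not word overlap, so `route` is only a simplified stand-in, and the Topic names and phrases are hypothetical.

```python
import re
from typing import Dict, List, Optional, Set

MAX_TRIGGERS_PER_TOPIC = 200  # per-Topic limit cited in this article

# Hypothetical Topics and trigger phrases for illustration.
TOPICS: Dict[str, List[str]] = {
    "Handbook": ["vacation", "paid time off", "medical benefits"],
    "Dad Jokes": ["tell me a joke", "dad joke"],
}


def validate(topics: Dict[str, List[str]]) -> None:
    """Raise if any Topic exceeds the trigger-phrase limit."""
    for name, phrases in topics.items():
        if len(phrases) > MAX_TRIGGERS_PER_TOPIC:
            raise ValueError(f"{name}: {len(phrases)} triggers exceeds {MAX_TRIGGERS_PER_TOPIC}")


def words(text: str) -> Set[str]:
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"[a-z']+", text.lower()))


def route(utterance: str, topics: Dict[str, List[str]]) -> Optional[str]:
    """Return the Topic whose best trigger phrase shares the most words, or None."""
    spoken = words(utterance)
    best_topic, best_score = None, 0
    for name, phrases in topics.items():
        for phrase in phrases:
            score = len(spoken & words(phrase))
            if score > best_score:
                best_topic, best_score = name, score
    return best_topic


validate(TOPICS)
print(route("How much paid time off do I get?", TOPICS))  # prints: Handbook
```

When `route` returns `None`, nothing matched, which is the point where the bot would ask the user to rephrase or offer the Quick Replies again.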

Once our trigger phrases are entered, we add nodes that tell the chatbot how to respond to matches and what should happen next. The most common node pattern can be summarized as three repeatable steps:

Question > Create Generative Answers > Message

Question: This node displays question text, captures what the user types or selects from several formatted data types (e.g., multiple choice, date, or number), and saves the input into a variable for later use

Create Generative Answers: This node takes the user input variable and sends it to the LLM, along with our pre-set data sources, to evaluate and create a formatted response

The AI prepares the formatted response and outputs it on the screen with citations of the correlated data sources.

Note: We can also configure additional or different data sources for an individual answer node to draw information from

Message: This node follows up with the user, asking whether the response answered their question and offering to search again or restart the conversation

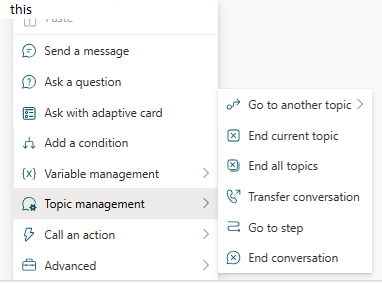
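The three-node Question > Create Generative Answers > Message pattern can be sketched in Python as follows. This is a hedged illustration, not Copilot Studio's implementation: `toy_generate` uses naive word overlap where the real node calls an LLM grounded on your data sources, and the `UserQuestion` variable name, the source text, and the follow-up wording are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical signature for the generative step: (question, sources) -> (answer, citations).
Generate = Callable[[str, Dict[str, str]], Tuple[str, List[str]]]


def _terms(text: str) -> set:
    """Lowercase words longer than three characters, with trailing punctuation stripped."""
    return {t for t in (w.strip("?.,!").lower() for w in text.split()) if len(t) > 3}


def toy_generate(question: str, sources: Dict[str, str]) -> Tuple[str, List[str]]:
    """Toy stand-in for the LLM: return every source sharing a longer word with the question."""
    hits = [name for name, text in sources.items() if _terms(question) & _terms(text)]
    if not hits:
        return "I couldn't find that in our sources.", []
    return " ".join(sources[name] for name in hits), hits


def run_topic(user_reply: str, sources: Dict[str, str], generate: Generate) -> List[str]:
    """Question > Create Generative Answers > Message; returns the messages shown."""
    variables = {"UserQuestion": user_reply.strip()}  # Question node saves the input
    answer, citations = generate(variables["UserQuestion"], sources)  # generative step
    if citations:
        answer += " [Sources: " + ", ".join(citations) + "]"  # show the cited data sources
    follow_up = "Did that answer your question? You can search again or start over."  # Message node
    return [answer, follow_up]


# Hypothetical one-passage data source.
SOURCES = {"Handbook: Paid Time Off": "Vacation requests follow the Paid Time Off policy."}

for line in run_topic("How do vacation requests work?", SOURCES, toy_generate):
    print(line)
```

Passing the generator in as a parameter keeps the node flow separate from the answering engine, much as Copilot Studio lets you point an individual answer node at different data sources.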

Navigation flows

The final work on our Topics is to determine whether branching logic is needed, based on how users might answer questions or on the information we need to gather to pull results from the AI engine.

This allows the chatbot to handle a myriad of conditions depending on the data and then deliver different result sets to the user.

In this case, we set the Question node's input to multiple-choice Quick Choices to drive the conversation, rather than asking the user to type the keywords we need for the third-party Icanhazdadjoke.com API we are calling. The conditions let us ask further questions and set specific variables that control the API parameters. At the end, the branches come back together to make an HTTP Request based on those variables.

Whether the user chose a random joke or a keyword search, we receive the response from Icanhazdadjoke.com and display it in a Message node. Note that when we make an external API call, we bypass the generative AI engine, so the results aren't commingled; only the response pulled from the API is shown.
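Outside Copilot Studio, the same branching and HTTP Request can be sketched in Python with the standard library. The sketch assumes icanhazdadjoke.com's public API as we understand it: `GET /` for a random joke and `GET /search?term=...` for a keyword search, both returning JSON when the `Accept: application/json` header is sent. The function names, the User-Agent string, and the choice to show only the first search result are hypothetical.

```python
import json
import urllib.parse
import urllib.request

BASE_URL = "https://icanhazdadjoke.com"


def build_joke_request(mode: str, keyword: str = "") -> urllib.request.Request:
    """Mirror the Topic's branches: the chosen mode sets the URL and its parameters."""
    if mode == "random":
        url = BASE_URL + "/"
    elif mode == "search":
        url = BASE_URL + "/search?" + urllib.parse.urlencode({"term": keyword})
    else:
        raise ValueError(f"unknown mode: {mode}")
    # Ask for JSON rather than the HTML page; the User-Agent value is a placeholder.
    headers = {"Accept": "application/json", "User-Agent": "copilot-demo (example)"}
    return urllib.request.Request(url, headers=headers)


def extract_joke(payload: dict) -> str:
    """Random responses carry 'joke'; search responses carry a 'results' list."""
    if "joke" in payload:
        return payload["joke"]
    results = payload.get("results", [])
    return results[0]["joke"] if results else "No jokes found for that keyword."


def fetch_joke(mode: str, keyword: str = "") -> str:
    """Perform the live call and return the text shown in the Message node."""
    with urllib.request.urlopen(build_joke_request(mode, keyword), timeout=10) as resp:
        return extract_joke(json.load(resp))
```

Calling `fetch_joke("search", "cat")` would make the live request. Keeping request construction and response parsing separate lets you test the branching without touching the network.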

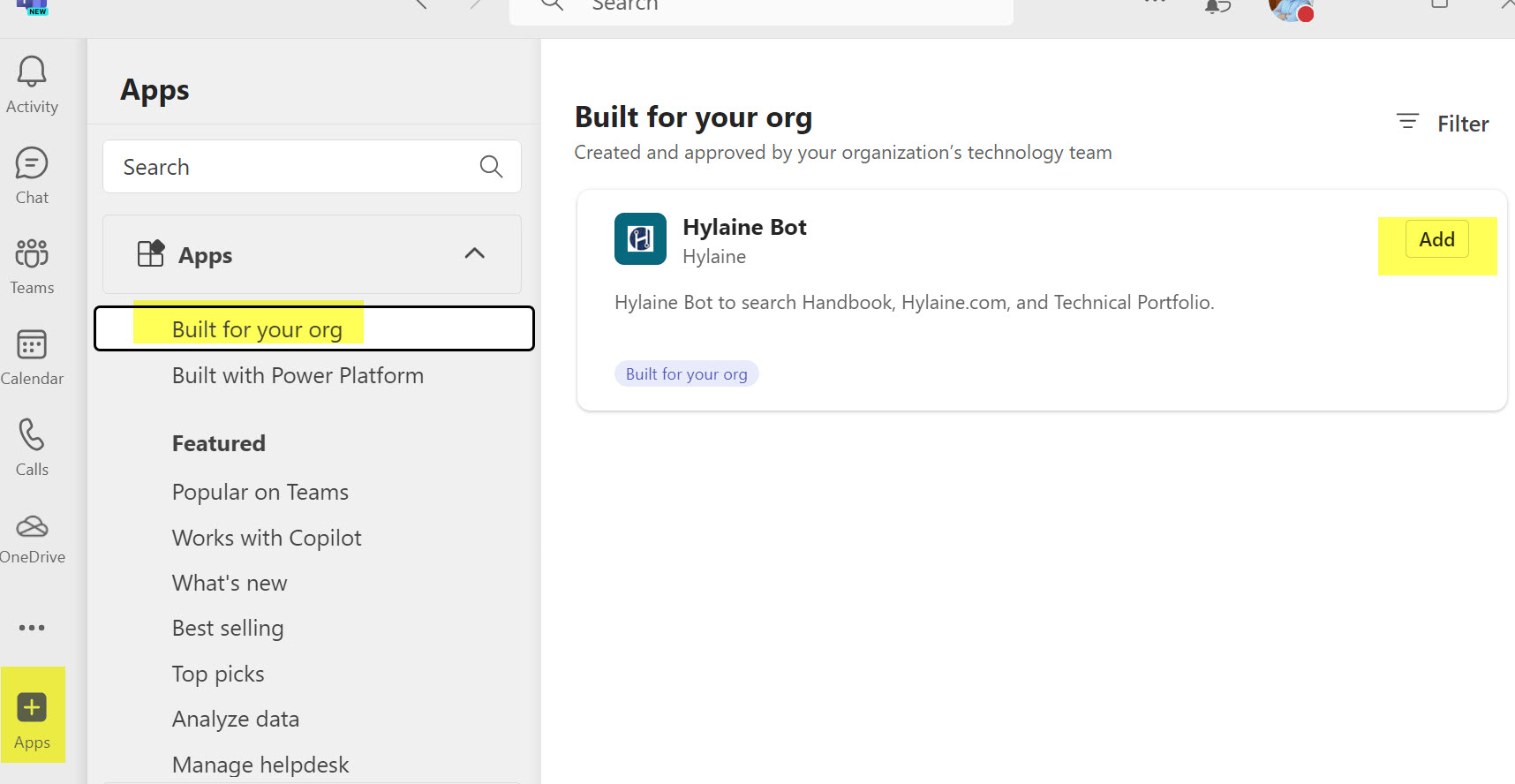

Publishing

Once development is complete, the last step is to publish the chatbot to the target Channel(s), initially for ourselves, by clicking the Publish button at the root level of Copilot Studio. This deploys the chatbot to Teams for the developer, who should then run through the test plan again to validate functionality. It also sends a request to the Teams administrator for evaluation and review. You may need to follow up with the administrator, as Teams does not always send a notification email.

Once a Teams administrator has reviewed the request, updated any security policies, and published the chatbot for the organization, end users can go into the Apps menu in Teams and add it. Which users can add it depends on the security settings the Teams administrator applied.

Looking Forward

Over the next year, Microsoft will continue bringing Copilot to its productivity apps, such as Word, Excel, PowerPoint, Outlook, Dynamics 365, Viva, Power Platform, and more. These toolsets aim to unleash creativity by eliminating repetitive or mundane tasks, unlock productivity by minimizing busy work, and level up information workers' skills. By delivering these tools through several channels within the Microsoft 365 ecosystem, Microsoft hopes to drive adoption where users already do their work.

Copilot may begin changing how people work with AI at a fundamental level and even how AI will work with end users themselves. As with any new toolset or way of doing work, there will be a learning curve, but those who dive in now to take on this new way of working will quickly gain an edge.

References

Microsoft Copilots

https://www.microsoft.com/en-us/microsoft-copilot

Data Transformation: Paving the way for AI Success in Your Organization

https://www.hylaine.com/whitepapersblog-posts/prepareforai

Forrester - Build Your Business Case For Microsoft 365 Copilot

https://www.forrester.com/report/build-your-business-case-for-microsoft-365-copilot/RES180016

Icanhazdadjoke.com

https://icanhazdadjoke.com

Microsoft - Introducing Microsoft 365 Copilot: your copilot for work

https://blogs.microsoft.com/blog/2023/03/16/introducing-microsoft-365-copilot-your-copilot-for-work/

ZDNet - What is Microsoft Copilot? Here's everything you need to know

https://www.zdnet.com/article/what-is-microsoft-copilot-heres-everything-you-need-to-know/