Measuring ROI Success for Artificial Intelligence Projects

CRAIG PILKENTON

VP, Technical Delivery

In the rush to develop AI initiatives that maximize productivity for their teammates and value for their customers, many organizations have not planned for a return on investment (ROI) before starting. By building and following a standardized framework for tracking and measuring ROI, organizations can evaluate whether each initiative is returning value to the company and identify which ones need to be re-evaluated.

Businesses increasingly see generative AI (genAI) platforms as the next efficiency game changer and a critical new driver of business transformation. Organizations are looking to these toolsets to help employees make data-driven decisions, optimize existing processes or reduce the time they take, and unlock new expansion opportunities. But as the number of genAI requests and investments within organizations continues to rise, defining ROI in the planning stage and then measuring against it is crucial to ensure the initiatives deliver true, tangible value and justify their resources. That said, because AI projects and applications vary so widely, the ROI they realize can vary significantly.

The number of companies experimenting with genAI's automation capabilities is expected to double from current levels, and by 2030 companies are expected to spend $42 billion a year on genAI projects covering chatbots, research, email writing, and summarization tools. The technology has been positioned as a productivity multiplier, but pinning down its ROI could prove elusive, especially for indirect returns. This is particularly true if ROI is not planned for at the outset, as with any other solution implementation.

Defining ROI in the Context of AI Initiatives

“Capturing and measuring exact productivity improvements has been a challenge for many clients,” said Rita Sallam, a distinguished Vice President Analyst at Gartner. “For [genAI], we are not saying that finding ROI may be difficult, but expressing ROI has been difficult because many benefits like productivity…have indirect or non-financial impacts that create financial outcomes in the future.”

These indirect outcomes, second- or third-order returns that are difficult to measure initially, can come from many types of genAI implementations, such as:

Code generation automation that makes software development teams more productive, so features reach customers faster

Real-time call center transcription analysis that surfaces answers as questions are asked, along with customer sentiment analysis for escalations and historical analysis of transcripts to find common questions or patterns to address

Integrated assistants to help with email summarization and creation, data analysis, or report writing

Internal-only chatbots that answer teammates' common procedural or process questions from handbooks and systems manuals

These returns are indirect and therefore difficult to measure. They may come from faster feature delivery or from hours saved each week on repeatable tasks. That is why ROI needs to be defined up front and then measured, so these initiatives can demonstrate that they deliver real value.
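To make an indirect return like time saved more concrete, one option is to convert hours saved into an estimated dollar value. The Python sketch below is illustrative only: the function names, the adoption rate, the fully loaded hourly cost, and the 48 working weeks per year are all hypothetical assumptions, not figures from this article.

```python
def weekly_time_savings_value(
    hours_saved_per_user: float,
    users: int,
    adoption_rate: float,
    loaded_hourly_cost: float,
) -> float:
    """Estimate the weekly dollar value of time saved by a genAI tool.

    All inputs are caller-supplied assumptions. The result represents
    capacity freed up, not cash that has actually left the budget.
    """
    if not 0.0 <= adoption_rate <= 1.0:
        raise ValueError("adoption_rate must be between 0 and 1")
    active_users = users * adoption_rate
    return hours_saved_per_user * active_users * loaded_hourly_cost


def annualize(weekly_value: float, working_weeks: int = 48) -> float:
    """Scale a weekly estimate to a year (48 working weeks is an assumption)."""
    return weekly_value * working_weeks


if __name__ == "__main__":
    # Hypothetical internal chatbot: 200 staff, 60% adoption, 1.5 hours saved each per week
    weekly = weekly_time_savings_value(1.5, users=200, adoption_rate=0.6, loaded_hourly_cost=55.0)
    print(f"Weekly value: ${weekly:,.2f}")            # Weekly value: $9,900.00
    print(f"Annual value: ${annualize(weekly):,.2f}")  # Annual value: $475,200.00
```

Treating saved time as saved money is itself an assumption: unless the freed hours are redirected to other valuable work, a figure like this overstates the realized return.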

Why Measuring ROI is Crucial for AI Projects

Measuring ROI is critical to any successful AI strategy because it allows organizations to justify the significant upfront investments these initiatives often require, including technology, talent, and infrastructure. By calculating the value an initiative is expected to deliver, organizations can demonstrate that value and win ongoing support from stakeholders, helping ensure the long-term viability of their AI projects.

Measuring ROI also lets an organization prioritize genAI initiatives by performance and potential impact, since not all genAI projects produce equal outputs and effects. By comparing ROI across initiatives, enterprises can direct resources to the projects that deliver the most value and maximize the return on their investments.
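Comparing initiatives this way can be as simple as computing each one's ROI over the same period and sorting the results. The sketch below assumes ROI is (benefits minus costs) divided by costs; the `Initiative` class, the `prioritize` helper, and all figures are hypothetical. Potential impact that is not yet reflected in measured benefits would still need separate judgment.

```python
from dataclasses import dataclass


@dataclass
class Initiative:
    name: str
    total_benefits: float  # measured or estimated benefits over the period
    total_costs: float     # all costs incurred over the same period

    @property
    def roi_pct(self) -> float:
        """ROI as a percentage: (benefits - costs) / costs * 100."""
        if self.total_costs <= 0:
            raise ValueError(f"{self.name}: total_costs must be positive")
        return (self.total_benefits - self.total_costs) / self.total_costs * 100


def prioritize(initiatives: list[Initiative]) -> list[Initiative]:
    """Return initiatives ordered from highest to lowest ROI."""
    return sorted(initiatives, key=lambda i: i.roi_pct, reverse=True)


if __name__ == "__main__":
    # Hypothetical 12-month figures for three initiatives
    portfolio = [
        Initiative("Internal handbook chatbot", 90_000, 120_000),
        Initiative("Code generation assistant", 540_000, 300_000),
        Initiative("Call center transcription", 410_000, 350_000),
    ]
    for item in prioritize(portfolio):
        print(f"{item.name}: {item.roi_pct:.1f}%")
```

Using the same measurement window for every initiative matters here; comparing a 3-month ROI against a 12-month ROI would skew the ranking.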

Gartner research shows what is at stake: it predicts that by 2025, 90% of enterprise deployments of genAI will slow as costs exceed real or perceived value. Gartner also estimates that 30% of genAI projects will be abandoned after the proof of concept (POC) phase due to inadequate data quality, insufficient risk controls, escalating costs, or unclear business value. Additionally, Gartner estimates that by 2028, more than 50% of enterprises that have built large language models (LLMs) from scratch will abandon their efforts due to costs, complexity, technical debt, and a failure to address a business need in their deployments.

Effectively communicating the ROI of AI investments is essential for building trust and buy-in among stakeholders, executives, employees, and customers. Measuring ROI for genAI is challenging, but it is a critical step toward sustainable AI strategies and investments that drive long-term business value.

Understanding and Measuring ROI in AI Projects

So, what is the best way to define ROI in genAI projects when it may be quite difficult to calculate or measure? It takes a structured approach: defining specific aims, identifying measurable and trackable metrics, and then properly tracking each initiative's successes or failures. The following tenets are high-level categories for building a framework that helps ensure no areas are missed.

Core Tenets for Measuring ROI:

Set Clear Objectives

GenAI objectives should be measurable, align with business strategy, and address specific organizational challenges or opportunities.

Identify KPIs (key performance indicators)

Choose KPIs that are relevant, measurable, aligned with business goals, and directly tied to the objectives, so that together they give a comprehensive view of the project's performance. At a minimum, they should cover cost savings, revenue growth, process efficiency, and customer satisfaction.
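One way to keep each KPI tied to its objective is to record the objective, a pre-rollout baseline, and a target alongside the metric itself. The sketch below is a hypothetical structure, not a prescribed schema; the KPI names, baselines, and targets are invented for illustration and cover the four minimum areas listed above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KPI:
    name: str
    objective: str   # business objective this KPI supports
    baseline: float  # value measured before the genAI rollout
    target: float    # value the initiative is expected to reach
    unit: str

    def progress(self, current: float) -> float:
        """Share of the baseline-to-target gap closed so far.

        Works whether the target is above or below the baseline
        (e.g. costs should fall, satisfaction should rise).
        """
        gap = self.target - self.baseline
        if gap == 0:
            raise ValueError(f"{self.name}: target must differ from baseline")
        return (current - self.baseline) / gap


# Hypothetical KPIs covering cost savings, revenue growth,
# process efficiency, and customer satisfaction
KPIS = [
    KPI("Monthly support cost", "Reduce service costs", 120_000, 100_000, "USD"),
    KPI("Upsell revenue", "Grow revenue", 50_000, 60_000, "USD per month"),
    KPI("Average handle time", "Resolve calls faster", 9.0, 7.0, "minutes"),
    KPI("CSAT score", "Improve customer satisfaction", 4.1, 4.4, "out of 5"),
]

if __name__ == "__main__":
    current = {
        "Monthly support cost": 110_000,
        "Upsell revenue": 52_000,
        "Average handle time": 8.0,
        "CSAT score": 4.2,
    }
    for kpi in KPIS:
        print(f"{kpi.name} ({kpi.objective}): {kpi.progress(current[kpi.name]):.0%} of gap closed")
```

Storing the baseline with the KPI is the design choice that matters most: without a pre-rollout measurement, there is nothing to compare later results against.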

Complete an Upfront Cost Estimate as a Baseline

Using cloud cost calculators and any enterprise costing guidelines, build a cost estimate covering 3, 6, and 12 months out, to serve as the baseline for comparing projected and actual costs. Make sure to consider the following types of costs:

Upfront costs: software, hardware, talent

Ongoing costs: maintenance, training, updates

Hidden costs: integration, data management
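A baseline estimate like this can be kept as a small model that projects cumulative cost at the 3, 6, and 12 month checkpoints and compares it with actual spend. In the hypothetical sketch below, upfront costs are assumed to land in the first month, ongoing and hidden costs are modeled as flat monthly amounts, and all line items and figures are invented for illustration.

```python
from dataclasses import dataclass, field

CHECKPOINTS = (3, 6, 12)  # months out for projected vs. actual comparisons


@dataclass
class CostEstimate:
    upfront: dict[str, float]          # one-time costs: software, hardware, talent
    ongoing_monthly: dict[str, float]  # recurring: maintenance, training, updates
    # Hidden costs (integration, data management), assumed here to be a flat monthly amount
    hidden_monthly: dict[str, float] = field(default_factory=dict)

    def projected_total(self, months: int) -> float:
        """Cumulative projected cost after the given number of months."""
        if months < 1:
            raise ValueError("months must be at least 1")
        monthly = sum(self.ongoing_monthly.values()) + sum(self.hidden_monthly.values())
        return sum(self.upfront.values()) + monthly * months


def cost_variance(estimate: CostEstimate, actuals: dict[int, float]) -> dict[int, float]:
    """Actual minus projected cost at each checkpoint (positive means over budget)."""
    return {m: actuals[m] - estimate.projected_total(m) for m in sorted(actuals)}


if __name__ == "__main__":
    estimate = CostEstimate(
        upfront={"software": 20_000, "hardware": 0, "talent": 60_000},
        ongoing_monthly={"maintenance": 3_000, "training": 1_000, "updates": 2_000},
        hidden_monthly={"integration": 1_500, "data management": 2_500},
    )
    for m in CHECKPOINTS:
        print(f"Projected cost at {m} months: ${estimate.projected_total(m):,.0f}")
    # Hypothetical actual spend recorded at the first two checkpoints
    print(cost_variance(estimate, {3: 118_000, 6: 151_000}))
```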

Track and Collect Data Properly

Implement systems and processes to collect data against the defined KPIs throughout the AI project lifecycle, since this data is what makes it possible to monitor progress.

This can involve integrating the solutions with existing data sources, setting up new data governance frameworks, implementing Data Reliability Engineering (DRE) to ensure consistent data collection, or even using qualitative assessment methods such as surveys and feedback mechanisms.
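The data-collection side can be sketched as a store of dated KPI observations plus a basic consistency check that flags reporting gaps, in the spirit of the data reliability practices mentioned above. This is a hypothetical, in-memory illustration; a real implementation would write to the organization's existing data sources, and the class, method, and source names here are assumptions.

```python
from collections import defaultdict
from datetime import date


class KPITracker:
    """In-memory log of KPI observations (a stand-in for a real data pipeline)."""

    def __init__(self) -> None:
        # KPI name -> list of (observation date, value, data source)
        self._obs: dict[str, list[tuple[date, float, str]]] = defaultdict(list)

    def record(self, kpi: str, when: date, value: float, source: str) -> None:
        """Store one observation; the source can be a system export or a survey."""
        self._obs[kpi].append((when, value, source))

    def latest(self, kpi: str) -> float:
        """Most recent value recorded for a KPI."""
        if not self._obs.get(kpi):
            raise KeyError(f"no observations for {kpi!r}")
        return max(self._obs[kpi], key=lambda obs: obs[0])[1]

    def missing_months(self, kpi: str, start: date, end: date) -> list[str]:
        """YYYY-MM periods between start and end with no observation for the KPI."""
        seen = {(d.year, d.month) for d, _, _ in self._obs.get(kpi, [])}
        missing = []
        year, month = start.year, start.month
        while (year, month) <= (end.year, end.month):
            if (year, month) not in seen:
                missing.append(f"{year}-{month:02d}")
            year, month = (year + 1, 1) if month == 12 else (year, month + 1)
        return missing


if __name__ == "__main__":
    tracker = KPITracker()
    tracker.record("Average handle time", date(2024, 1, 31), 9.0, "call platform export")
    tracker.record("Average handle time", date(2024, 3, 31), 8.2, "call platform export")
    tracker.record("CSAT score", date(2024, 3, 31), 4.2, "customer survey")
    print(tracker.latest("Average handle time"))  # 8.2
    print(tracker.missing_months("Average handle time", date(2024, 1, 1), date(2024, 3, 31)))  # ['2024-02']
```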

Calculate ROI Using Collected Data Against KPIs

With all the previous pieces in place, organizations can measure their initiatives over time and calculate an ROI that aligns with the organization’s methodologies.

ROI is usually expressed as a percentage: the net benefit of an initiative (its total benefits minus its total costs) divided by its total costs. Benefits include cost savings, revenue growth, and other measurable gains, while costs encompass expenses such as technology investments, personnel, and training.
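As a worked illustration of this calculation, the sketch below totals benefit and cost categories for one initiative and returns ROI as a percentage. The category names mirror those in the paragraph above, but the `roi_percent` function and every figure are hypothetical.

```python
def roi_percent(benefits: dict[str, float], costs: dict[str, float]) -> float:
    """ROI = (total benefits - total costs) / total costs, expressed as a percentage."""
    total_benefits = sum(benefits.values())
    total_costs = sum(costs.values())
    if total_costs <= 0:
        raise ValueError("total costs must be positive")
    net_benefit = total_benefits - total_costs
    return net_benefit / total_costs * 100


if __name__ == "__main__":
    # Hypothetical 12-month figures for a single initiative
    benefits = {"cost savings": 180_000, "revenue growth": 90_000, "other measurable gains": 30_000}
    costs = {"technology": 120_000, "personnel": 60_000, "training": 20_000}
    print(f"ROI: {roi_percent(benefits, costs):.1f}%")  # ROI: 50.0%
```

A positive result means the initiative returned more than it cost over the period; a negative result means it has not yet paid for itself. Indirect benefits only enter this calculation once they have been converted into an estimated dollar value, and that conversion is an assumption worth stating to stakeholders.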

These tenets are not exhaustive, but starting with these high-level areas gives organizations a way to define, track, and calculate ROI that is meaningful to stakeholders and demonstrates value to the business.

Successful AI ROI Examples

Many larger genAI initiatives have been running since 2023, after LLM-powered tools such as ChatGPT became widely available to the public in late 2022, possibly without ROI metrics being defined first. But have any succeeded in returning value to stakeholders? According to “The State of AI in Early 2024” from McKinsey & Company, businesses are beginning to realize value:

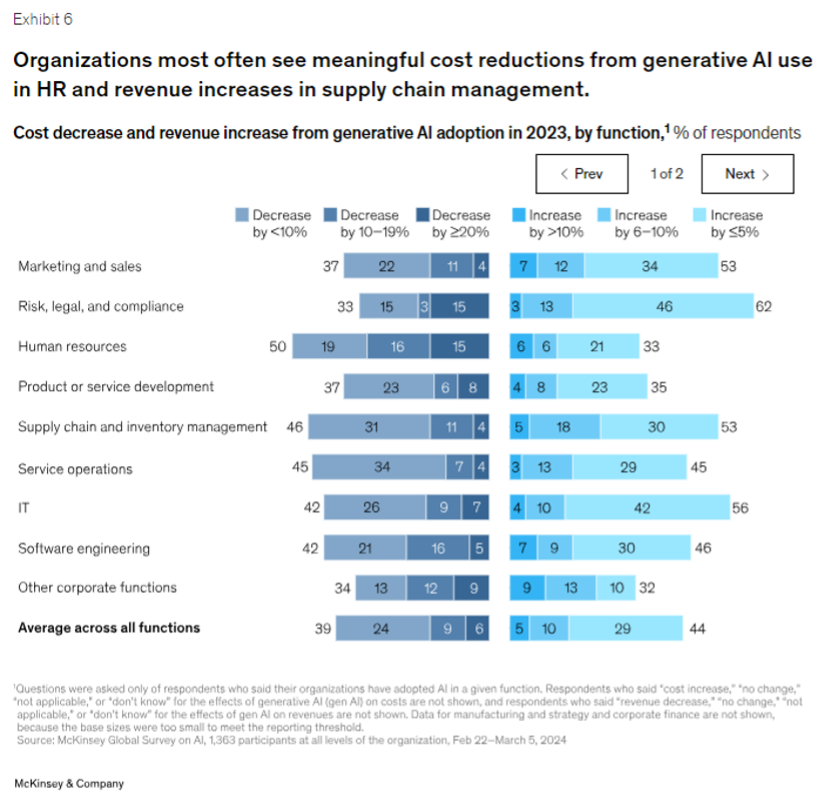

The function in which the largest share of respondents report seeing cost decreases is human resources. Respondents most commonly report meaningful revenue increases (of more than 5 percent) in supply chain and inventory management (Exhibit 6). For analytical AI, respondents most often report seeing cost benefits in service operations—in line with what we found last year—as well as meaningful revenue increases from AI use in marketing and sales.

McKinsey & Company, “The State of AI in Early 2024”

These early trends suggest that measurable ROI can be realized in a form that stakeholders can see.

Measuring the ROI of AI initiatives for long-term success requires ongoing monitoring, analysis, and evaluation of optimizations that can improve those returns. By following a structured set of core tenets and regularly evaluating each initiative's performance, organizations can make data-driven decisions, allocate resources effectively, and maximize the value of their AI investments.

With a framework in place, organizations can quantify the value of their AI initiatives, optimize performance, and maximize returns, giving stakeholders confidence in the ROI that can be realized.

References

Computerworld – The ROI in AI (and how to find it)

https://www.computerworld.com/article/1612282/the-roi-in-ai-and-how-to-find-it.html

McKinsey & Company – The state of AI in early 2024: Gen AI adoption spikes and starts to generate value

https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai

Gartner – Take This View to Assess ROI for Generative AI

https://www.gartner.com/en/articles/take-this-view-to-assess-roi-for-generative-ai

Hylaine.com – Data Transformation: Paving the Way for AI Success in Your Organization